In automatic image annotation, we try to automatically describe image content by keywords or possibly a short piece of text. This is much needed today, when huge amounts of image data are created every day but only a small part of it is manually annotated, while most data searching still relies on text. Intensive research in this area has therefore been conducted in recent years and many different approaches have been proposed. Our team is developing an automatic annotation framework that follows a search-based paradigm, i.e. it tries to determine what is in an image by searching for visually similar images and processing their descriptions.

MUFIN Annotation Framework

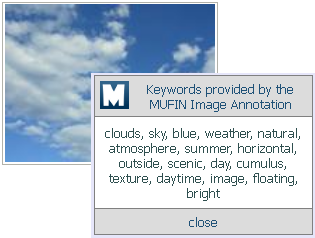

The MUFIN Annotation Framework is a software project that consists of different automatic annotation tools developed by our laboratory. It exploits content-based image retrieval provided by the MUFIN Image Search engine and provides different tools that work with the search results and try to select the most relevant keywords for a given input image. In the following, we provide a more detailed description of these techniques and two software tools that allow anybody to experiment with the MUFIN annotations.

Image search phase

Since the only input for the annotation-forming process is the image itself, the processing needs to be based on the visual content of the image. Therefore, we first extract visual content descriptors from the image and submit them to the MUFIN Image Search engine. The engine searches a suitable collection of well-described images and returns the images most similar to the query, which can be used for further processing. In the current implementation, we use the DeCAF visual descriptors and 20 million images from the Profimedia photo stock as the underlying database. This dataset contains images with rich text metadata, which is used in further processing.
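The search step can be illustrated with a minimal sketch. It assumes descriptors are plain lists of floats compared by Euclidean distance and scans a tiny in-memory collection by brute force; the function names, the distance function, and the data layout are illustrative assumptions, not the MUFIN Image Search API, which relies on its own indexing to handle millions of images.

```python
import heapq
import math


def euclidean(a, b):
    """Euclidean distance between two equally long descriptor vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_search(query, collection, k):
    """Return the k nearest images as (distance, image_id, keywords) tuples.

    `collection` is a hypothetical list of (image_id, descriptor, keywords)
    triples standing in for the annotated image database.
    """
    scored = (
        (euclidean(query, descriptor), image_id, keywords)
        for image_id, descriptor, keywords in collection
    )
    # nsmallest keeps only the k best candidates, ordered by distance.
    return heapq.nsmallest(k, scored, key=lambda item: item[0])


collection = [
    ("img1", [0.0, 0.0], ["dog"]),
    ("img2", [1.0, 1.0], ["cat"]),
    ("img3", [5.0, 5.0], ["car"]),
]
nearest = knn_search([0.9, 0.9], collection, k=2)
nearest_ids = [image_id for _, image_id, _ in nearest]
```

A brute-force scan is only practical for small collections; it is used here solely to show what the search phase returns to the later stages.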

Text transformation phase

After the similarity search has been evaluated, the text transformation phase is initiated. Different modules of the Annotation Framework are employed to clean and analyze the descriptions of similar images and to detect the most common concepts. Individual modules use different external knowledge sources, especially dictionaries and the WordNet semantic database. The rich information about relationships between words provided by WordNet is used to discover semantic connections between candidate keywords and to cluster words according to their meaning. The candidate words receive probability coefficients derived from the visual relevance of the source image and the semantic relatedness to other keywords in the candidate set. The final annotation for the query image is formed by the set of words with the highest probability coefficients.
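One part of this scoring, the contribution of visual relevance, can be sketched as distance-weighted keyword voting. The weighting formula `1 / (1 + distance)`, the function name, and the input layout are assumptions made for illustration; the WordNet-based semantic relatedness step is deliberately left out, so this is not the framework's actual scoring.

```python
from collections import defaultdict


def score_keywords(neighbors, top_n=3):
    """Rank candidate keywords by distance-weighted votes.

    `neighbors` is a list of (distance, keywords) pairs for the similar
    images. Closer images contribute larger weights (assumed formula).
    Returns up to `top_n` (keyword, normalized_score) pairs.
    """
    votes = defaultdict(float)
    for distance, keywords in neighbors:
        weight = 1.0 / (1.0 + distance)
        # Basic cleaning: lowercase, trim, and count each word once per image.
        cleaned = {word.lower().strip() for word in keywords}
        for word in cleaned:
            if word:
                votes[word] += weight
    total = sum(votes.values())
    if total == 0:
        return []
    # Sort by score (descending), then alphabetically for stable output.
    ranked = sorted(votes.items(), key=lambda kv: (-kv[1], kv[0]))
    return [(word, score / total) for word, score in ranked[:top_n]]


neighbors = [
    (0.0, ["Dog", "grass"]),
    (1.0, ["dog", "park"]),
    (3.0, ["cat"]),
]
annotation = score_keywords(neighbors, top_n=2)
```

The normalized scores sum to one over all candidates, so they can be read as rough probability coefficients in the sense used above.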

Visual Content Ontology

Recently, the framework was extended by a categorization taxonomy that improves the accuracy of the results and also raises the information level of the outputs. Using such a taxonomy of important visual concepts, users are able to specify the desired level of generality of the output texts, or obtain more specific words from selected classes. The taxonomy provides a different point of view on the WordNet concepts; it categorizes them into individual classes convenient for image annotation. More detailed information is available on a dedicated VCO page.
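The idea of choosing a level of generality can be sketched by walking up a concept hierarchy. The tiny hierarchy below is a made-up toy example, not the Visual Content Ontology or WordNet, and the function name is hypothetical.

```python
# Toy child -> parent mapping (hypothetical, for illustration only).
TOY_PARENTS = {
    "poodle": "dog",
    "dog": "animal",
    "cat": "animal",
    "animal": "living thing",
}


def generalize(word, steps, parents=TOY_PARENTS):
    """Replace `word` by its ancestor `steps` levels up the hierarchy.

    Stops early at the root or when the word is unknown.
    """
    current = word
    for _ in range(steps):
        if current not in parents:
            break
        current = parents[current]
    return current


specific = generalize("poodle", 0)
general = generalize("poodle", 2)
```

Here a larger number of steps yields a more general output word, while zero steps keeps the most specific one.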

Relevance Feedback

So far, we have discussed only automatic text extraction methods that utilize external information sources (vocabularies, WordNet, ontology). Such methods produce very interesting results and leave considerable room for further development, but there are situations (abstract pictures, a detail of a larger image, blurred images, etc.) in which human reasoning is irreplaceable. Thus, we also focused on developing an iterative relevance feedback module for the Annotation Framework. This way, the user is able to take part in the annotation-forming process, positively or negatively evaluate parts of the annotation, and thereby influence the overall form of the final annotation.

With the relevance feedback component, the annotation process is expected to run in several iterations. Initially, the input image is annotated automatically by the Annotation Tool. The resulting annotations are presented to the user, who evaluates their relevance. The annotation process is then restarted, but this time it also receives the additional information provided by the user. This principle is meant to improve the initial image search phase and therefore raise the relevance of the processed textual data. The user feedback phase may repeat several times, until the user is satisfied with the result.
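One possible way to feed user feedback back into the search results is sketched below: images whose descriptions contain accepted keywords are moved closer to the query, and images with rejected keywords are moved further away. The `boost` and `penalty` factors and the function name are assumptions for illustration and do not describe how the framework combines text and visual similarity.

```python
def rerank_with_feedback(neighbors, accepted, rejected, boost=0.5, penalty=2.0):
    """Re-rank similar images using keyword feedback from the user.

    `neighbors` is a list of (distance, keywords) pairs; `accepted` and
    `rejected` are sets of lowercase keywords evaluated by the user.
    The distance is scaled by `boost` and/or `penalty` (assumed factors).
    """
    reranked = []
    for distance, keywords in neighbors:
        words = {word.lower() for word in keywords}
        factor = 1.0
        if words & accepted:
            factor *= boost
        if words & rejected:
            factor *= penalty
        reranked.append((distance * factor, keywords))
    # Sort by adjusted distance only, never by the keyword lists.
    return sorted(reranked, key=lambda item: item[0])


neighbors = [
    (1.0, ["cat"]),
    (1.2, ["dog"]),
    (2.0, ["dog", "park"]),
]
reranked = rerank_with_feedback(neighbors, accepted={"dog"}, rejected={"cat"})
reranked_keywords = [keywords for _, keywords in reranked]
```

In an iterative setting, the re-ranked images would be passed to the text transformation phase again, and the loop would repeat until the user accepts the annotation.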

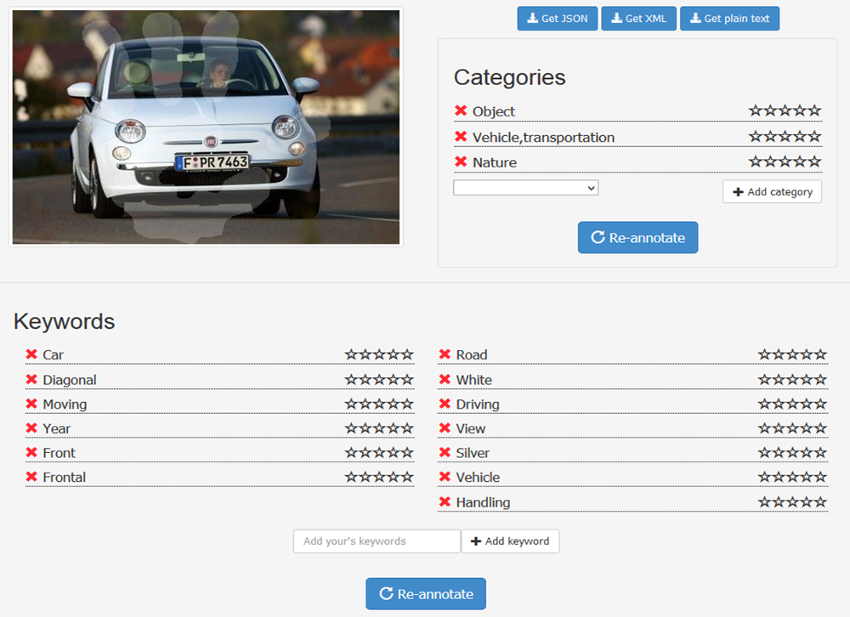

Image Annotation Web application

Using the MUFIN Image Annotation web application, you can request an annotation of any image by either providing its URL or uploading the image file. In the current prototype implementation, several test images are also available. The application provides an annotation divided into categories and keywords, which can be either exported into various formats or further refined in a relevance feedback cycle. In the latter case, users need to evaluate some of the retrieved concepts, using the number of stars to express the relevance (the more stars, the more relevant the concept is). It is also possible to add a new keyword or category. When the feedback is submitted, the image is re-annotated with respect to the user’s opinion.

License

The presented software tools are a result of the project GAP103/10/0886 (Czech Science Foundation programme). The owner of the result is Masaryk University, a public higher education institution, ID: 00216224. Masaryk University allows other companies and individuals to use this software free of charge and without territorial restrictions under the terms of the GPL v3 license.

This permission is granted for the duration of property rights.

This software is not subject to special information treatment according to Act No. 412/2005 Coll., as amended. If a person using the software under this license offer violates the license terms, the permission to use the software terminates.

Download

MUFIN Annotation Framework source code

release date 1. 12. 2014

Related publications

- P. Budikova, M. Batko, P. Zezula: Multi-modal image retrieval for search-based image annotation with RF. 2018 IEEE International Symposium on Multimedia (ISM 2018).

- P. Budikova, M. Batko, P. Zezula: ConceptRank for search-based image annotation. Multimedia Tools and Applications, 77(7): 8847-8882 (2018).

- P. Budikova, M. Batko, J. Botorek, P. Zezula: Search-based image annotation: Extracting semantics from similar images. In Experimental IR Meets Multilinguality, Multimodality, and Interaction – 6th International Conference of the CLEF Association (CLEF 2015).

- P. Budikova, J. Botorek, M. Batko, P. Zezula: DISA at ImageCLEF 2014: The Search-based Solution for Scalable Image Annotation. In CLEF 2014 Evaluation Labs and Workshop, Online Working Notes.

- M. Batko, J. Botorek, P. Budikova, P. Zezula: Content-Based Annotation and Classification Framework: A General Multi-Purpose Approach. In Proceedings of the 17th International Database Engineering & Applications Symposium (IDEAS 2013).

- P. Budikova, M. Batko, P. Zezula: MUFIN at ImageCLEF 2011: Success or Failure? In CLEF 2011 Labs and Workshop, Notebook Papers.

- P. Budikova, M. Batko, P. Zezula: Online Image Annotation. In 4th International Conference on Similarity Search and Applications (SISAP 2011).