MotionMatch is a software technology for recognizing people by the way they walk. Recognition is based on the analysis of motion capture data, which can be acquired by motion capture devices, including the popular Microsoft Kinect and ASUS Xtion. The acquired data are first preprocessed by detecting walking cycles and extracting movement features, in the form of relative velocities of specific joints, for each walking cycle. Individual walking cycles can then be compared with one another to calculate their similarity. Finally, a proposed classification method is used to recognize the person who performed a query motion. The current version of the MotionMatch technology is demonstrated via a web application that allows users to select a query motion and verify whether the technology recognizes the query person correctly.

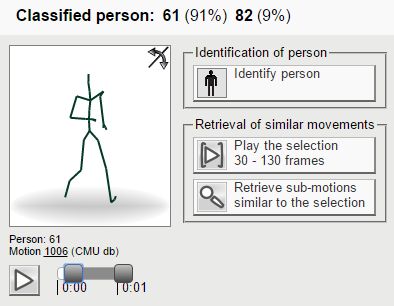
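The feature-extraction step described above can be illustrated with a minimal sketch. It is not the MotionMatch implementation: the class `GaitFeatureSketch`, its method names, the pose layout (`poses[frame][joint][axis]`), and the reading of "relative velocity" as the speed of a joint measured relative to a reference joint such as the pelvis are all assumptions made for illustration. The sketch also assumes that both walking cycles have already been resampled to the same number of frames before they are compared.

```java
/** Hypothetical sketch of per-cycle gait features; not the MotionMatch API. */
final class GaitFeatureSketch {

    /**
     * Computes, for each pair of consecutive frames, the speed of one joint
     * measured relative to a reference joint (assumption: e.g. the pelvis).
     * poses[f][j] holds the {x, y, z} position of joint j in frame f.
     */
    static double[] relativeSpeeds(double[][][] poses, int joint, int reference, double fps) {
        if (poses.length < 2) {
            return new double[0];
        }
        double[] speeds = new double[poses.length - 1];
        for (int f = 1; f < poses.length; f++) {
            double sum = 0.0;
            for (int axis = 0; axis < 3; axis++) {
                // Position of the joint expressed relative to the reference joint.
                double prev = poses[f - 1][joint][axis] - poses[f - 1][reference][axis];
                double curr = poses[f][joint][axis] - poses[f][reference][axis];
                double delta = curr - prev;
                sum += delta * delta;
            }
            // Displacement per frame multiplied by frames per second gives speed.
            speeds[f - 1] = Math.sqrt(sum) * fps;
        }
        return speeds;
    }

    /** Euclidean distance between two feature vectors of equal length. */
    static double distance(double[] a, double[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("Feature vectors must have equal length");
        }
        double sum = 0.0;
        for (int i = 0; i < a.length; i++) {
            double diff = a[i] - b[i];
            sum += diff * diff;
        }
        return Math.sqrt(sum);
    }
}
```

With features of this kind, the similarity of two walking cycles could be scored by calling `distance` on their speed vectors, where a smaller distance means more similar cycles.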

The demonstration application indexes more than 12 hours of human motion data (about 5.4 million video frames) divided into 2,515 motions. Each motion represents a given kind of activity performed by a given actor. A single motion is represented by a sequence of poses that are drawn on the web-page canvas as human stick figures (each database motion is assigned a different color). The stick figure shown corresponds to the current frame, which can be changed by moving the range slider located under the canvas (the slider length corresponds to the motion length). To identify the actor of a motion, click the “Identify person” button. Walk cycles are then detected within the selected motion and used to retrieve the nine most similar walk cycles from the database of indexed motions. Finally, the retrieved motions are classified to estimate the identity of the person, which is anonymized and shown only as an ID.
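The identification step described above (retrieve the most similar walk cycles, then classify them) can be sketched as a nearest-neighbour search followed by a vote. This is a simplified stand-in, not the classification method proposed by the authors: the class `WalkCycleClassifierSketch`, the `Cycle` type, the Euclidean distance, the linear scan, and the majority vote are all assumptions chosen for illustration.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical k-nearest-neighbour identification sketch; not the MotionMatch API. */
final class WalkCycleClassifierSketch {

    /** A walk cycle with its anonymized actor ID and a fixed-length feature vector. */
    static final class Cycle {
        final int actorId;
        final double[] features;

        Cycle(int actorId, double[] features) {
            this.actorId = actorId;
            this.features = features;
        }
    }

    /** Euclidean distance (assumed similarity measure; smaller means more similar). */
    static double distance(double[] a, double[] b) {
        double sum = 0.0;
        for (int i = 0; i < a.length; i++) {
            double diff = a[i] - b[i];
            sum += diff * diff;
        }
        return Math.sqrt(sum);
    }

    /** Returns up to k database cycles closest to the query, nearest first. */
    static List<Cycle> nearest(double[] query, List<Cycle> database, int k) {
        List<Cycle> sorted = new ArrayList<>(database);
        sorted.sort(Comparator.comparingDouble((Cycle c) -> distance(query, c.features)));
        return new ArrayList<>(sorted.subList(0, Math.max(0, Math.min(k, sorted.size()))));
    }

    /**
     * Majority vote over the retrieved cycles. On a tie, the actor that first
     * reaches the winning count (scanning nearest first) is returned.
     * Returns -1 when no neighbours are given.
     */
    static int majorityActor(List<Cycle> neighbours) {
        Map<Integer, Integer> votes = new HashMap<>();
        int bestActor = -1;
        int bestVotes = 0;
        for (Cycle c : neighbours) {
            int count = votes.merge(c.actorId, 1, Integer::sum);
            if (count > bestVotes) {
                bestVotes = count;
                bestActor = c.actorId;
            }
        }
        return bestActor;
    }
}
```

Under these assumptions, a query cycle would be identified with `majorityActor(nearest(queryFeatures, database, 9))`, where 9 matches the number of retrieved walk cycles mentioned above.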

Another advantage of MotionMatch is its ability to efficiently search for the most similar movements (sub-motions) within the indexed motions. Users specify a query sub-motion by setting its beginning and ending frames. After selecting the query sub-motion and clicking the “Retrieve sub-motions similar to the selection” button, the technology retrieves similar sub-motions, which are displayed on a web page and ordered from left to right by their similarity score. The number of retrieved sub-motions is limited to the 50 most similar ones. A new query can also be defined by selecting a query motion from the retrieved results.
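The sub-motion retrieval described above can be illustrated with a brute-force sketch that slides a window of the query length over every indexed motion and keeps only the best-scoring windows. The actual technology is described as searching efficiently over indexed data, which this linear scan does not attempt to reproduce. The class `SubMotionSearchSketch`, the `Match` type, the per-frame feature layout (`motion[frame][dimension]`), and the Euclidean window distance are assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.PriorityQueue;

/** Hypothetical brute-force sub-motion search; not the MotionMatch API. */
final class SubMotionSearchSketch {

    /** One retrieved sub-motion: which motion, where it starts, and its distance to the query. */
    static final class Match {
        final int motionIndex;
        final int startFrame;
        final double distance;

        Match(int motionIndex, int startFrame, double distance) {
            this.motionIndex = motionIndex;
            this.startFrame = startFrame;
            this.distance = distance;
        }
    }

    /** Euclidean distance between the query and the window of the motion starting at 'start'. */
    static double windowDistance(double[][] motion, int start, double[][] query) {
        double sum = 0.0;
        for (int f = 0; f < query.length; f++) {
            for (int d = 0; d < query[f].length; d++) {
                double diff = motion[start + f][d] - query[f][d];
                sum += diff * diff;
            }
        }
        return Math.sqrt(sum);
    }

    /** Returns at most 'limit' windows, ordered from most to least similar. */
    static List<Match> search(List<double[][]> motions, double[][] query, int limit) {
        if (limit <= 0 || query.length == 0) {
            return new ArrayList<>();
        }
        // Max-heap on distance, so the worst candidate kept so far is evicted first.
        PriorityQueue<Match> worstFirst = new PriorityQueue<>(
                Comparator.comparingDouble((Match m) -> m.distance).reversed());
        for (int i = 0; i < motions.size(); i++) {
            double[][] motion = motions.get(i);
            for (int start = 0; start + query.length <= motion.length; start++) {
                worstFirst.add(new Match(i, start, windowDistance(motion, start, query)));
                if (worstFirst.size() > limit) {
                    worstFirst.poll();
                }
            }
        }
        List<Match> result = new ArrayList<>(worstFirst);
        result.sort(Comparator.comparingDouble((Match m) -> m.distance));
        return result;
    }
}
```

Calling `search(motions, query, 50)` would mirror the limit of 50 results mentioned above. Note that neighbouring windows overlap heavily, so a practical system would probably suppress near-duplicate hits; this sketch does not.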

Related Publications

- J. Valcik, J. Sedmidubsky, P. Zezula: Assessing similarity models for human-motion retrieval applications. Computer Animation and Virtual Worlds, John Wiley & Sons Ltd. ISSN 1546-427X, 2015.

- J. Sedmidubsky, J. Valcik: Retrieving Similar Movements in Motion Capture Data. In 6th International Conference on Similarity Search and Applications (SISAP 2013). Springer-Verlag, 2013.

- J. Sedmidubsky, J. Valcik, P. Zezula: A Key-Pose Similarity Algorithm for Motion Data Retrieval. In 12th International Conference on Advanced Concepts for Intelligent Vision Systems (ACIVS 2013). Springer-Verlag, 2013.

Open-source License

The MotionMatch technology is implemented in Java. The web application demonstrating its functionality is implemented as a Java web application for Apache Tomcat. Both implementations are provided as open source. The core of the implementation can be downloaded here. Further information about the technology and the web application can be obtained by contacting us at: xsedmid [at] fi.muni.cz.

The MotionMatch technology is developed under the project Efficient Searching in Large Biometric Data (No. VG20122015073) within the security-research programme (BV II/2-VS) funded by the Ministry of the Interior of the Czech Republic.